If you look at the older articles on this blog, you will see that about a year ago I restarted the blog after I got infected with the container virus :) At first, I was using Docker for everything at home, since I run Linux on all of my personal computers.

On my work laptop I did not work much with Docker back then, as we were not running Windows 10 at that time. Around June we received the message that we were going to get an upgrade to Windows 10. The second I received this message I was super excited.

After the upgrade was finished and I had set up my laptop, the first thing I did, of course, was install the beta version of Docker for Windows and fire up Docker! I was extremely happy to see the familiar responses to the docker commands in my PowerShell window instead of my Linux terminal windows.

After the first couple of tests, I started to play around with what is, in my opinion, one of the nicest features: mounting a folder from the Windows drive as a data volume. When I got to this point, unfortunately, my excitement decreased a little bit.

Due to some of the security settings on the laptop, I was not able to use the standard way of mounting my Windows folders as data volumes in my containers (which works by creating a share for each of your physical drives).

Although I could still make use of data volumes in Docker, I did not have an easy way of working with my files from the host in a Docker environment. Even though this was quite frustrating, I still used Docker a lot, for example in the robot with face recognition project mentioned in an earlier post.

After having this problem simmer in my mind for the past couple of months, the other day during my bike ride to a running training I had an epiphany. Instead of connecting from the container to the host system, I could reverse this and connect from the host system to a specially crafted container that would expose a given data volume.

My first step was to start a container based on the dperson/samba image. Unfortunately, this approach did not work directly: I could not bind the SMB ports from this container to the host system because Windows was already bound to these ports. So my next step was to solve this challenge. What I needed was a way to let the host system connect to the container.

The way I found (and I am not sure if there are other or better ways) is to set the networking of the container to host mode with the --net=host command line argument of the docker executable. The container then shares the network of the MobyLinux VM, so the Samba server running in it can be reached via the IP address of that VM.

If I have a data volume myTestVolume that I use in some other container, I can now expose this volume by running the following command in my PowerShell window:

    docker run -d -it --name samba --rm `
      --net=host `
      -e USERID=0 `
      -e GROUPID=0 `
      -v myTestVolume:/myTestVolume `
      dperson/samba `
      -s "myTestVolume;/myTestVolume;yes;no"

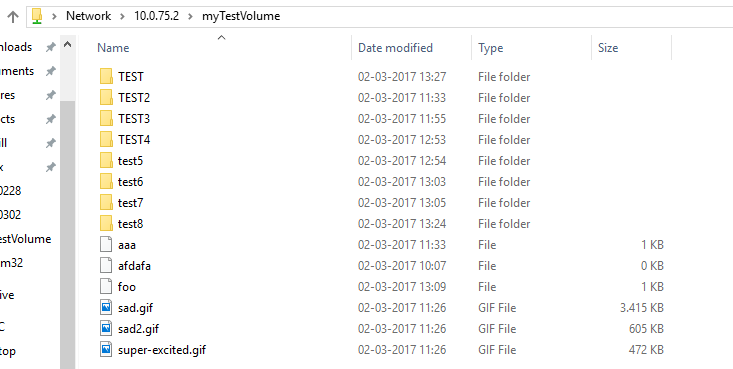

After this container is up and running, I can access the contents of the data volume from within Windows by going to the network path \\10.0.75.2\myTestVolume:

A few remarks remain:

- All files are mapped to root:root, since I force all files to be owned by root (USERID=0 and GROUPID=0)

- You have to provide one -v argument, plus a matching -s share definition, for each data volume if you want to share multiple data volumes

- Not sure if this is the best or the safest approach, but most importantly it works ;)
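To illustrate the remark about multiple data volumes, here is a minimal sketch of a shell helper that assembles the command for any number of volumes. The function name build_samba_command and the second volume name myOtherVolume are made up for this example, and it assumes a POSIX shell (for example Git Bash or WSL) rather than PowerShell. It also assumes that dperson/samba accepts a repeated -s option, one per share. The helper only prints the command so it can be reviewed before running it.

```shell
#!/bin/sh
# Sketch only: build_samba_command is a hypothetical helper, not part of
# Docker or the dperson/samba image. It prints the docker command instead
# of running it, so the result can be checked first.
build_samba_command() {
    # Same fixed options as in the single-volume command from the article
    cmd="docker run -d -it --name samba --rm --net=host -e USERID=0 -e GROUPID=0"

    # One -v mount per data volume, mounted at /<volume name>
    for vol in "$@"; do
        cmd="$cmd -v $vol:/$vol"
    done

    cmd="$cmd dperson/samba"

    # One -s share definition per data volume, with the same settings
    # (yes;no) as in the single-volume command
    for vol in "$@"; do
        cmd="$cmd -s \"$vol;/$vol;yes;no\""
    done

    printf '%s\n' "$cmd"
}

# Example: print the command for two volumes
build_samba_command myTestVolume myOtherVolume
```

The printed line can be pasted into the PowerShell window as a single command. Each volume then shows up as its own share under the same IP address.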

For sure this solution is not as easy or as nice as the native approach for mounting Windows folders as data volumes provided by Docker for Windows, but I am really happy that I have at least found a workaround for the problem that has been bugging me for the past couple of months!

If you have another approach, I would be happy to hear about it!

Update 2017-08-20: The approach provided in this article requires you to manually specify each of the data volumes yourself. In a follow-up article I show a new Docker image I created that automagically shares all of the data volumes and keeps the list up to date whenever data volumes are created or destroyed.